Gradient Descent for Linear Regression Explained, Step by Step

Gradient descent is one of the most famous techniques in machine learning and used for training all sorts of neural networks. But gradient descent can not only be used to train neural networks, but many more machine learning models. In particular, gradient descent can be used to train a linear regression model! If you are curious as to how this is possible, or if you want to approach gradient descent with smaller steps and not jump straight to neural networks, this post is for you. You will learn how gradient descent works from an intuitive, visual, and mathematical standpoint and we will apply it to an exemplary dataset in Python.

Share on:

Background image by Rohit Tandon (link)

Outline

You have most likely heard about gradient descent before. But maybe you still have some questions as to how it really works. Maybe you never found an intuitive and visual explanation for the math behind it, or maybe you never found clean Python code that implements it. If this situation feels familiar, then this article is exactly for you! Here, we’ll go through gradient descent step by step and apply it to linear regression. We’ll take a look at the intuition, the math, and the code behind gradient descent and we’ll also visualize what’s going on under the hood to fully understand this algorithm and learn to use it to it’s full potential.

With that being said, I will assume that you are familiar with linear regression. If you are not, then I would highly recommend you read Linear Regression Explained, Step by Step before coming back to this article. In that article, you will learn everything you need to know about how linear regression works and how you can use it. This post can be considered a sequel to the article about linear regression since I will use some terms and functions that were defined in the post about linear regression. So even if you feel somewhat comfortable with linear regression, I’d highly recommend at least skimming over the linked article.

Since gradient descent is in essence, just math, this post will be more math-heavy than some of my other posts. But do not worry, we will take very small steps and I will make sure to explain every piece of an equation so that you know exactly what we are doing. As long as you know basic high school-level calculus, you are going to be alright. Of course, we are also going to implement gradient descent in raw Python as well as with the help of scikit-learn. And if you have read any of the other articles on this website before, you know that the visual aspect won’t fall short either! So let’s get started!

Formulating the Problem

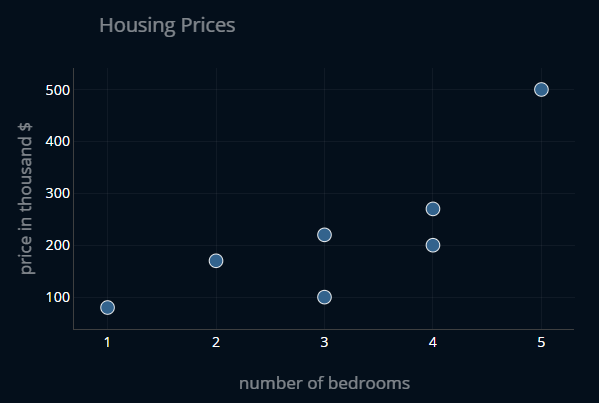

So what exactly are we trying to do? Say we have a small dataset where we are given the number of bedrooms in a certain house and we want to predict the price of said house.

We now want to somehow predict the price of any new house that enters our dataset. To do this, we create a linear function that has a minimal mean squared error (or MSE) with regard to our data points.

Once a new point enters our dataset, we simply plug in the number of bedrooms of our house into our function and we receive the predicted price for that dataset.

The Intuition

Instead of directly computing the ideal values for our variables, we instead approach them step by step. In a nutshell, gradient descent starts with a random function and continuously improves it until it can no longer be improved.

I think Aurélien Géron describes a great analogy for this method in his book “Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow”, so I will share it with you here.

Imagine you are on the side of a hill and you want to get to the valley. But the air is very foggy. So foggy, that you can only see the ground you are standing on and nothing more. But you can still feel the slope of the hill, right? So by taking very small and cautious steps in the downward direction, you will eventually reach the valley. This is in essence how gradient descent works.

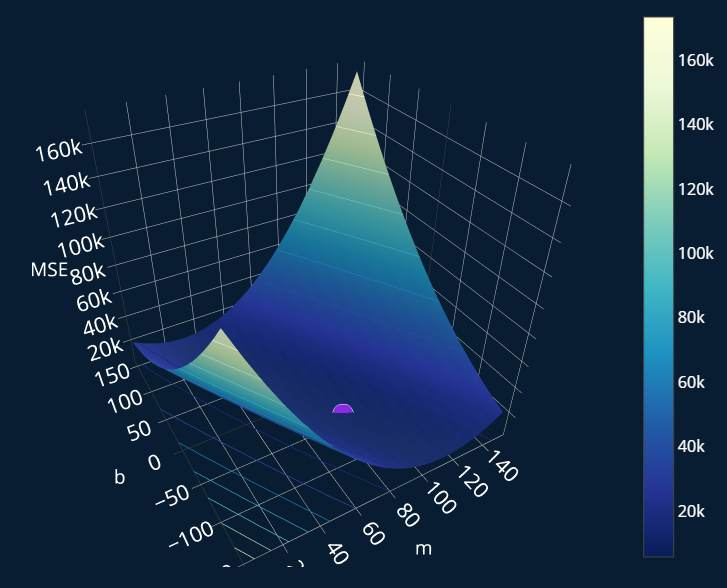

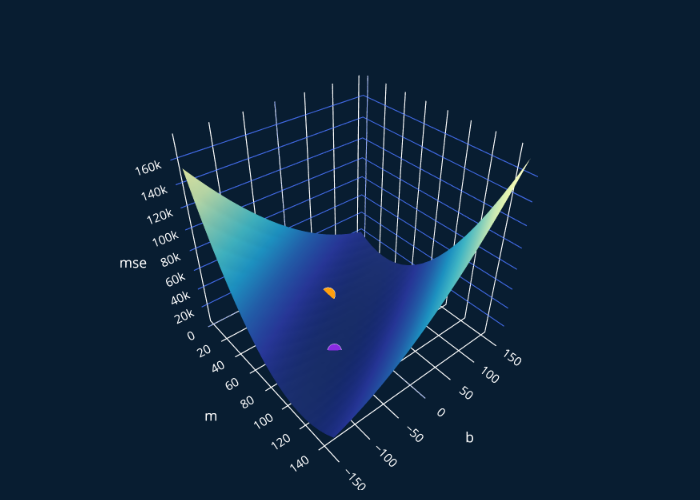

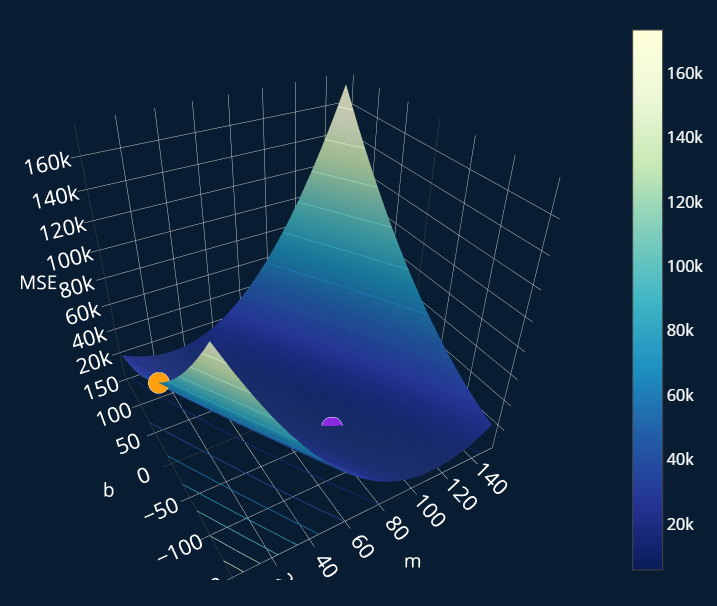

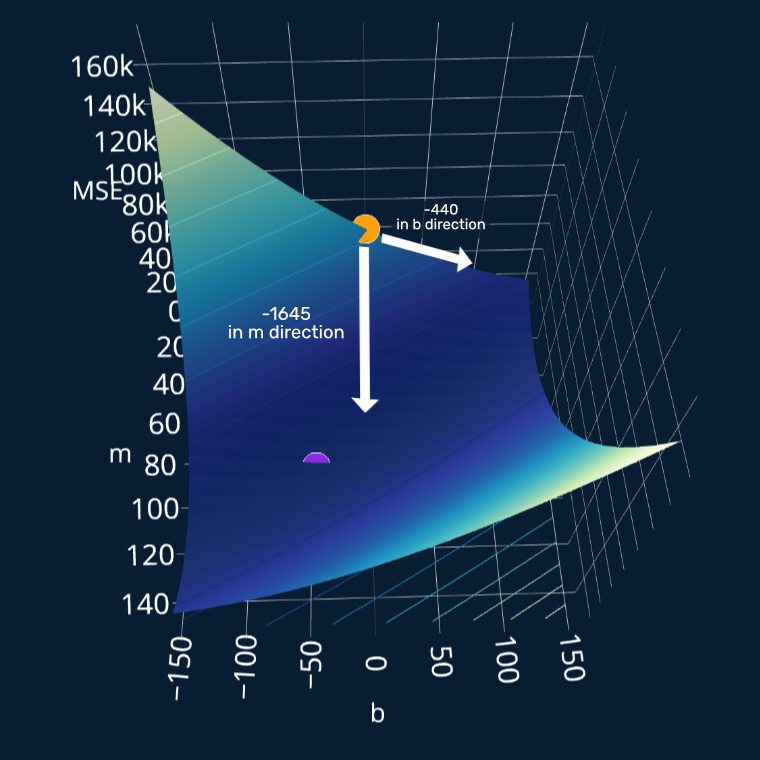

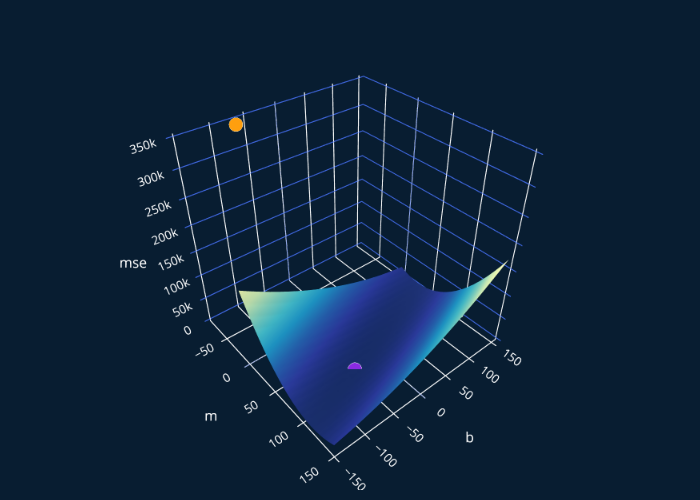

Now let’s apply this to our problem. We can create a three-dimensional plot where we plot the MSE (z-axis) for every (x-axis) and every (y-axis). So let’s take a look:

The purple point is the global minimum of our MSE. The question now is, how do we get to this point? We know that we want to approach the minimum iteratively, so our end result should look something like this:

We have now covered the intuition, so let’s now slowly approach the math behind gradient descent.

The Math

Let’s start with the function that always returns zero.

In other terms, our is 0 and our is 0. If we were to plug into our MSE, we would get:

If we would also plug in our data points, we would get that the MSE for our function is ~. This means that the predictions of our function are off by about 255.000$ on average.

The Gradient

Our MSE tells us how well (or rather how poorly) our function fits our data. This means that we want to make our MSE as low as possible. Our MSE is just a function, so if you have taken a calculus class before you might recall that we can compute the minimum of it directly by taking the first derivative of the MSE and setting it equal to 0.

So if we have a function we can derive (or differentiate) it once and we get:

This differentiation was done with respect to our variable . This way of writing down the derivative is called Lagrange’s notation, named after Joseph Louis Lagrange. We can also use what’s called Leibniz’s notation, named after Gottfried Wilhelm Leibniz. With this our derivative now looks like this:

With the Leibniz notation, we have to explicitly write out the variable in regard to which we want to derive our function to.

But our MSE depends on two variables, not one. So how can we derive a function with two variables? Well, we can compute what’s called a partial derivative. This sounds fancy but it really just means: treat one variable as a variable and every other variable as a constant.

So if our function looks like this: , we can now no longer calculate just one single derivative. Instead, we can calculate two partial derivatives. One with respect to , and one with respect to . We can write this down like this:

We now use the symbol instead of to signal that we are taking a partial derivative.

When we differentiate with respect to , we treat as a constant and vice versa. This is how we can calculate partial derivatives. So let’s now calculate the partial derivatives of our MSE with respect to and our MSE with respect to .

If you do the math, you will get something along the lines of:

which we can simplify to:

The parts colored in blue are equal to .

What we just calculated is the gradient of our function! The gradient for a function is denoted with the symbol

If we now plug in our values for and , namely 0 and 0, and we also explicitly write out our data values, we get:

which is equal to:

And with that, we have computed the gradient for our function , great! Now that the gradient-part is done, we can move to the descent-part!

The Descent

If we think back to the analogy with the hill and the fog, our final goal was to get to the valley. But because of the fog, we can only see what is directly below our feet. We can feel the slope of the hill. In our case, the 3d-plot of the MSE is our hill and the slope is represented by our gradient. In order to move, we have to increase or decrease the values of our parameters and

The derivative of a function gives us the slope of . In our case, the first value of the gradient (~ -1645) is negative. Ideally, we want this value to be 0, because that would mean we don’t need to move anymore and thus have reached our minimum. Right now this value is negative, which means that the current slope of our MSE with respect to is negative. This means that to get closer to our minimum, we have to increase . Why? Let’s consider a more simple example.

Visualizing in 2D

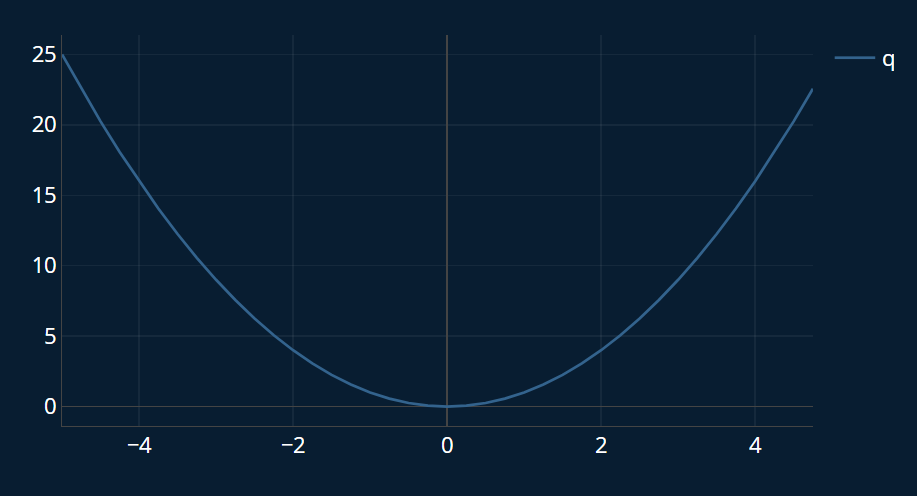

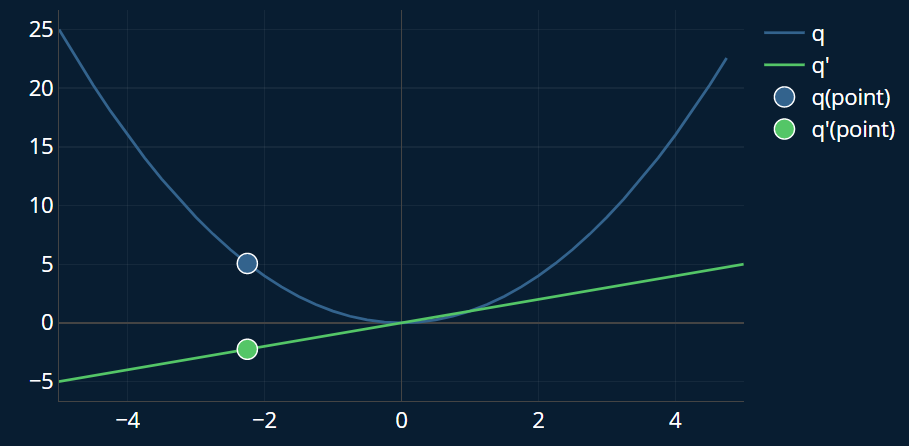

Imagine we have this simple quadratic function , which looks like this:

Now we want to find its minimum. We derive the function and we get . There is only one derivative because we only have one variable. Now we can overlay and . Let’s imagine as our imaginary “2D-hill” for a moment, and choose a random starting position, like this one:

Ok, so how do we know which direction we need to move towards to reach the minimum of our simplified “2D-hill”? Our derivative at this point is -2.25.

Our minimum is at the point where our derivative is zero, i.e. the point where . So we always want to go towards this point.

If we move towards the right, i.e. if we increase , we get closer to the point where . If we would be standing on the opposite side of our hill, our derivative would be positive. In that case, we would have to decrease to get closer to our minimum.

So for every point on the green line (the derivative), if it is below 0 (i.e. if our derivative is negative), we have to increase our variable. And if it is above zero (i.e. our derivative is positive), we have to decrease it. In other words, we just subtract our derivative from our variable. If our derivative is negative, the minuses will cancel out and it will be added instead.

But our derivative not only tells us which direction we should move towards, it also tells us how large our steps should be. If the absolute value of our derivative is high, meaning it is high in the negatives or high in the positives, then our steps can be larger. Similarly, if our derivative is close to zero, we will only perform small steps. In other words, if the slope below our feet is very steep, we can cover more distance with a single step than if our slope was very shallow.

We can draw imaginary arrows to visualize the direction and size of our steps at any point based on our derivative:

So the important points to remember are:

- positive derivative -> reduce

- negative derivative -> increase

- high absolute derivative -> large step

- low absolute derivative -> small step

Now let’s go back to our original example.

Visualizing in 3D

In the case of our gradient, the first value tells us how to adjust and the second one tells us how to adjust . Let’s first recall the visualization of the MSE (our “hill”) and let’s also add in the point we are currently at as well as the minimum:

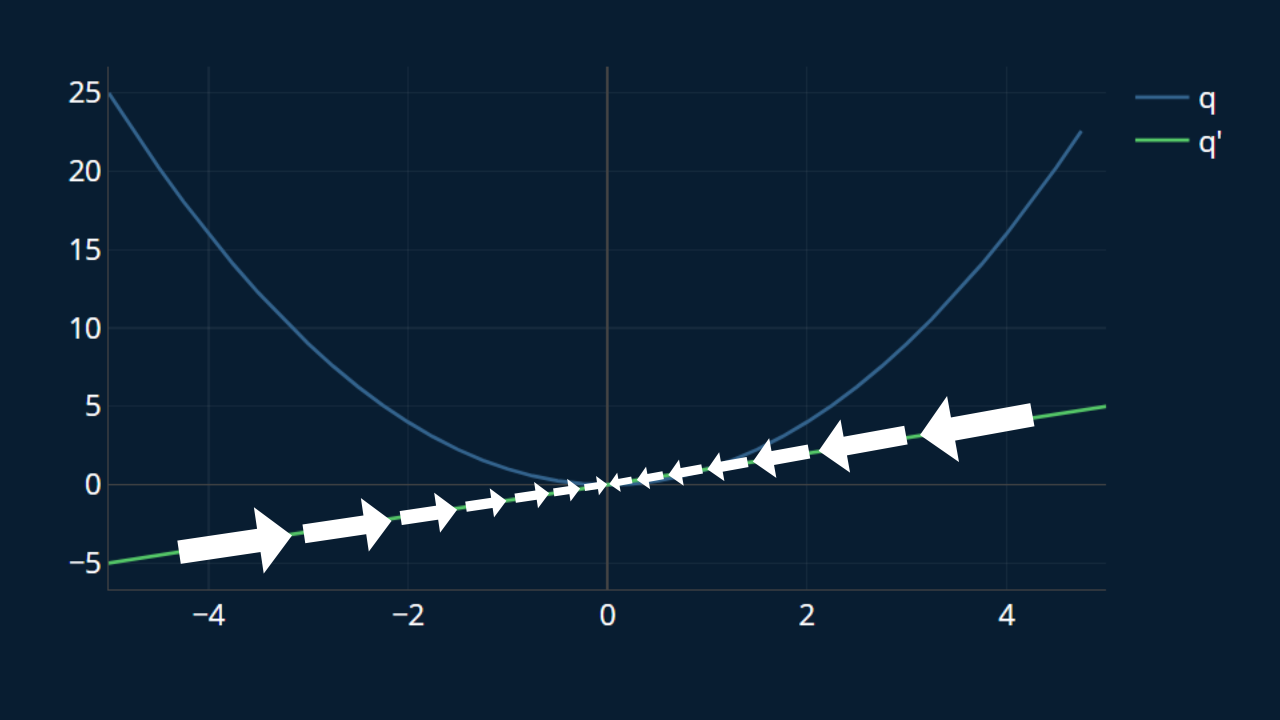

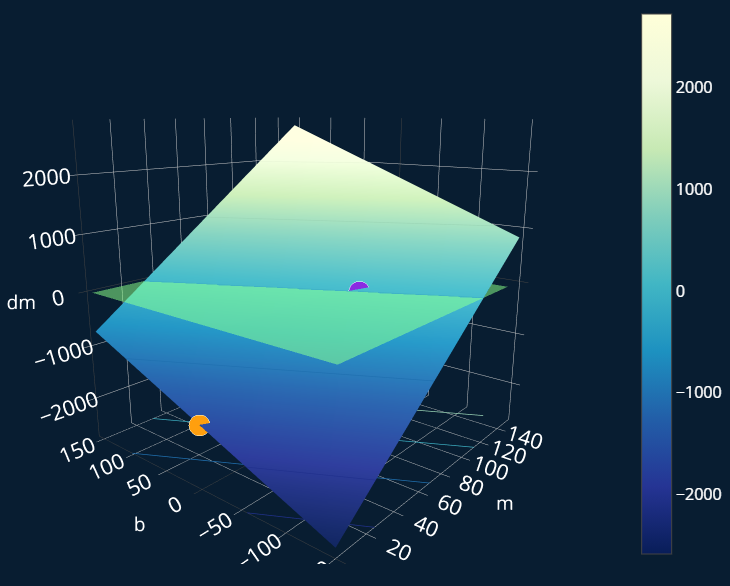

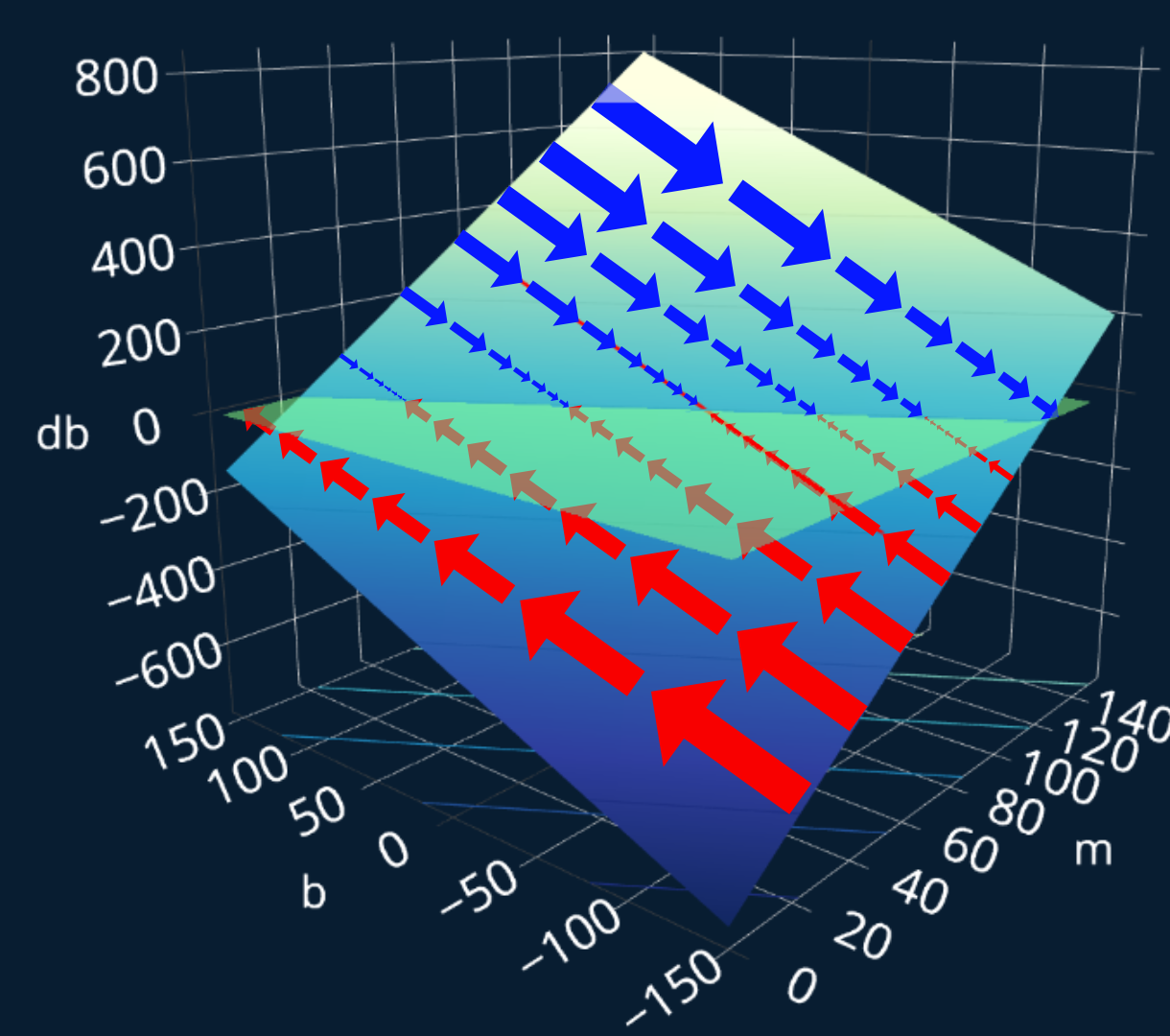

Great! The orange point displays our current position. Now we can visualize the partial derivative of our MSE with respect to in the same way, and we will also display our two points as well:

As you may notice, this looks very similar to our simplified example with and ! Here we have three variables, so we want to get to the “point” where . But because we are in three dimensions, there isn’t just one point where this is true, there is an entire plane where . So to make the plot a bit easier to interpret, I also added in a greenish plane at .

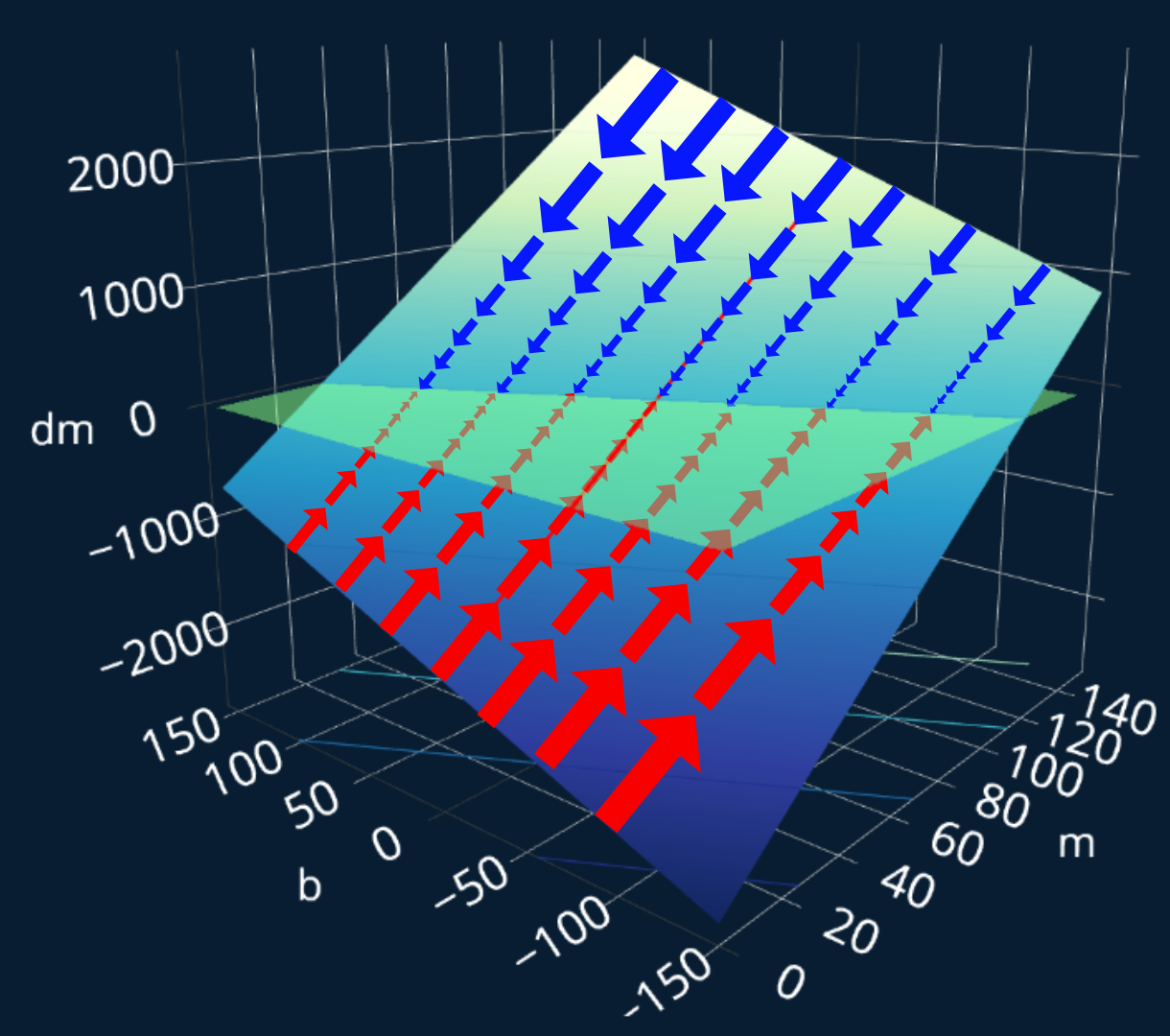

We can now apply the same logic we used in our 2d-example. Currently, we only care about adjusting . So for every point on this plane, if it is above this slightly transparent greenish plane, we need to decrease . Similarly, if it is below the plane, we have to increase . We can once again draw our imaginary arrows to visualize the direction and size of our steps:

As you see, right now we only move across the x-axis (or in our case, the -axis), because we only want to know how we need to adjust .

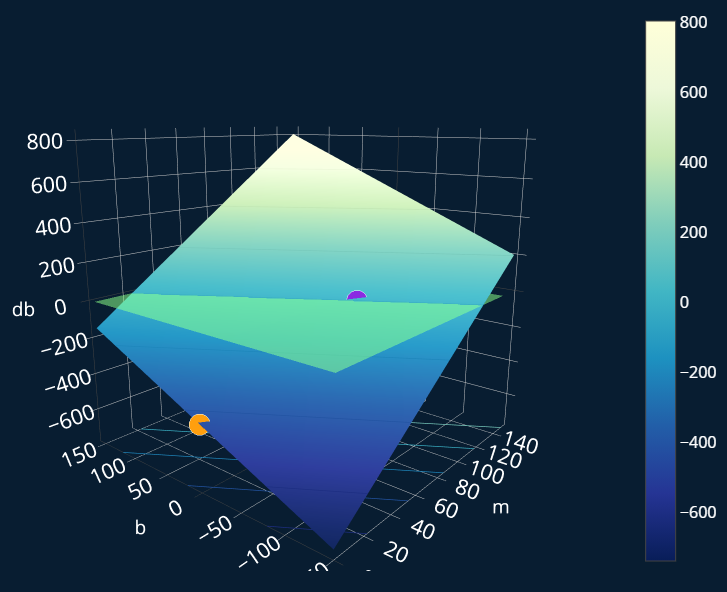

We can also create a similar 3d-plot for the partial derivative of our MSE with respect to to see how we need to adjust b:

And we can again draw some imaginary arrows to visualize the steps we would have to take at any particular point:

This again looks very familiar. Our gradient was this:

so now we know that we need to increase both our and our . But we need to increase our a lot more than we need to increase our ! This makes sense because the slope of our “MSE-hill” at our current point is steeper in the -direction than it is in the -direction. If you have been following this post very closely, you might notice that something is off.

A Mistake..?

Let’s see how our step would change our position on the hill:

Indeed, the slope in -direction is steeper than the one in -direction. And indeed, the arrow in -direction leads us towards our minimum. But wait! The arrow in -direction leads us away from our minimum! What?! Have we done something wrong? Isn’t gradient descent supposed to take us to the minimum, not away from it? Well, no, we did not do anything wrong and yes, gradient descent seems to take us in the wrong direction. But our derivatives are correct and we have also not made any other mistakes up until this point. But why are we moving in the wrong direction, then?

Let’s take a closer look at the slope in -direction. It really does look like it is leading down towards the right in the above image. Meaning there could be a minimum there. Gradient descent just doesn’t know if there is one. Right now, it seems like a valid option because the slope points us towards this side of the plot. But once we perform a couple more steps of gradient descent, we will soon see that the slope in -direction begins to change and we do indeed “turn around” and start moving in the right direction again. So right now there isn’t really anything we can do to “correct” our movements, because we don’t know where the minimum really is, or at least, our program does not know so. Only we have this fancy 3d-visualization where we can see that we are moving in the wrong direction. But gradient descent does not see the issue, at least not now. In our case, this is no big deal. We only have one minimum, which means that we will find it sooner or later. But in scenarios where we have more than one minimum, gradient descent can actually get stuck in a local minimum, and not get out. But this is beyond the scope of this article. For now, everything is alright and we will soon see that gradient descent will still find our minimum, it might just need a few extra steps to do so.

Introducing: The Learning Rate

Ok, so we now understand how and why we need to adjust our parameters. But by how much should we adjust them? Should we really increase our by 1645? No, that would be way too much! If we were to increase our by 1645, we would completely overshoot our minimum and land on the other side of our plot. Instead, we choose a so-called learning rate. Our gradient is then multiplied by our learning rate before we apply it to our parameters. This learning rate is a number between 0 and 1. 0 means we do not change our values at all and 1 means we subtract the entirety of our gradient. Common values for a learning rate are usually in the range of . If we choose a learning rate of 0.001, we would update our values like this:

Our new values would now be:

So we update our function:

We can add a little at the bottom to show that this is our function after one iteration of performing gradient descent.

If we calculate the MSE for our new function, we get ~62362.30 ! It’s improved (before it was ~)!

Now we do this over and over again until we cannot improve our MSE any further. In practice, you will almost never achieve an MSE of 0, so it’s a good idea to look at how much our MSE improves every iteration, and once we make little to no improvements, we can usually stop. This technique in particular even has a name and it’s called early stopping.

We have now learned how the math behind gradient descent works and how we can start with some random function and iteratively improve it until we end up with our ideal function. Now, let’s also implement this algorithm in Python.

The Code

In essence, we want to define a function gradient_descent that takes in our data ( and ),

our variables ( and ), our minimization criterion (in our case this will be the MSE,

since we want to minimize that) and performs gradient descent to find the values for our variables that minimize the criterion.

We also need to pass in a learning rate and the number of iterations (commonly called epochs) we want to perform gradient descent for.

dataset = np.array([(1,80),(2,170),(3,100),(3,220),(4,200),(4,270),(5,500)])x = dataset[:,0]y = dataset[:,1]def add_intercept_ones(X):intercept_ones = np.ones((len(X),1)) # results in array( [ [1],..,[1] ] )X_b = np.c_[intercept_ones,X]return X_bX_b = add_intercept_ones(X)

Adjustment: Vectorization

Since we could have more than just one feature (the number of bedrooms), we might have a function with more variables. If we wanted to predict the price of a house based on the number of bedrooms, the year it was built in and the average house price of the city the house stands in, our function would look more like this:

To make our life easier, we will vectorize our function and instead write:

If you are asking yourself where the went, what this ”” in means, or if our function really remained the same, I encourage you to read the section about vectorization in the post about linear regression, where I explain this step in a lot more detail.

Code Outline

So the skeleton of our function should look something like this:

def gradient_descent(x, y, theta, criterion, number_of_iterations, alpha):for i in range(number_of_iterations):# perform one step of gradient descentreturn ideal_theta

We want to perform number_of_iterations many gradient descent-steps, which is why we added the for-loop. Now let’s perform gradient descent step by step.

Making Predictions

First, we have to calculate the predictions of our model for . We can do this by defining a higher order function that takes in and and creates our linear function for us, which it then returns. This would look like this:

def create_function(theta):def f(X_b):return np.dot(X_b,theta)return f

We can then use our function like this:

f = create_function(theta)y_predicted = f(X_b)

Note that we could also just write np.dot(X_b,theta) directly,

instead of creating this higher order-function,

but this way we can more easily reuse our code if we wanted to create a more complicated function.

Calculating the Loss

Now we need to calculate our loss (the MSE). Let’s recall what our vectorized MSE looks like

And now let’s translate it into (vectorized) code:

def mse(y, y_predicted):error = y-y_predictedloss = 1/(y.size) * np.dot(error.T, error)return loss

Note that since error is just a vector containing all of the residuals,

np.dot(error.T, error) is just a shortcut for the dot product of error with itself.

This results in the same output as if we were to calculate each residual

individually, square them up and add them together.

If you are still unsure about this, I recommend you implement this function both

vectorized and non-vectorized and then compare them to each other.

Now we can calculate our loss like this:

loss = mse(y, y_predicted)

Optimizing our Variables

The last step which we have to perform is to optimize our variables. First, we have to compute the gradient of our MSE. For this, we need our two partial derivatives. Let’s recall what they look like:

We can implement our derivatives like this:

def mse_derivative_m(X,y,y_predicted):return -(2/y.size) * sum(X * (y - y_predicted))def mse_derivative_b(y,y_predicted):return -(2/y.size) * sum(y - y_predicted)

Note that since we do not multiply our X_b with theta here, we need to use our regular X instead of Xb.

Now we can compute our gradient in just one line of code:

gradient = [mse_derivative_m(x,y,y_predicted),mse_derivative_b(y,y_predicted)]

Once we have our gradient, we can adjust our and our :

m -= learning_rate * gradient[0] #adjust mb -= learning_rate * gradient[1] #adjust b

Let’s now put all of this into our main function:

def gradient_descent(x, y, theta, criterion, number_of_iterations, learning_rate):X_b = add_intercept_ones(X)for i in range(number_of_iterations):# predict and calculate lossf = create_function(theta) # create the current functiony_predicted = f(X_b) # predict our entire xloss = criterion(y,y_predicted) # calculate the error# perform optimizationgradient = np.array([mse_derivative_b(y,y_predicted), mse_derivative_m(x,y,y_predicted)]) # calculate gradienttheta = theta - learning_rate * gradient #adjust m and breturn theta

Great! Let’s try it out:

theta = np.array([0.,0.])num_epochs = 25learning_rate = 0.001ideal_theta = gradient_descent(X, y, theta, mse, num_epochs, learning_rate)

If we then inspect our ideal theta we get:

>>>print(ideal_theta)array([ 8.19030548, 31.03371126])

It’s certainly getting closer to our minimum! Our minimum lies at the point where and and . As you see, is moving in the right direction, but is still moving the wrong way.

We can now calculate the MSE for our ideal function:

ideal_function = create_function(ideal_theta)y_pred = f(X_b)print(mse(y,y_pred))

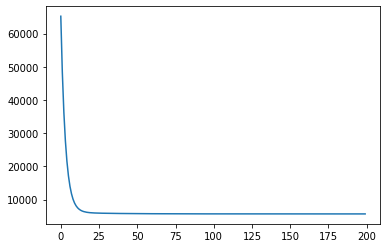

which returns us a value of ~23223 ! Awesome! But it’s not exactly at the minimum yet. If we adjust our learning rate a bit and train our model longer, we do eventually reach the minimum. F.e. with a learning rate of 0.075 and 200 training epochs, we reach a loss of ~5692, which is very close to our minimum. Feel free to experiment with the values a bit and see if you find a more optimal configuration. If you do, definitely let me know in the comments below!

Some Adjustments

Let’s now make a few improvements to our function. Wouldn’t it be cool if we could track the loss of our function across every epoch? We can do this by creating a new list and always appending the current loss to it. Also, we can print the current loss every 10 or so epochs!

def gradient_descent(x, y, theta, criterion, number_of_iterations, learning_rate):X_b = add_intercept_ones(X)loss_history=[]for i in range(number_of_iterations):# predict and calculate lossf = create_function(theta) # create the current functiony_predicted = f(X_b) # predict our entire xloss = criterion(y,y_predicted) # calculate the errorloss_history.append(loss)# perform optimizationgradient = np.array([mse_derivative_b(y,y_predicted), mse_derivative_m(x,y,y_predicted)]) # calculate gradienttheta = theta - learning_rate * gradient #adjust m and bif i%10==0:print("Current Epoch: {}, Current Loss: {}".format(i,loss))return theta,loss_history

We now execute gradient descent once again:

theta = np.array([0.,0.])num_epochs = 200learning_rate = 0.0075ideal_theta, loss_history = gradient_descent(X, y, theta, mse, num_epochs, learning_rate)

Now we can plot our loss across the iterations:

import matplotlib.pyplot as pltplt.plot(np.arange(num_epochs),loss_history)

which gives us the following plot:

Nice!

If you want to add some additional features to the implementation, here are a few ideas:

- Return the history of thetas in addition to the history of losses

- Add functionality to save/load your (ideal) function

- Implement early stopping

If you want to make sure you understood everything in this article, try to implement one or two of these features!

Scikit-Learn

We can also use the popular library scikit-learn to solve our linear regression problem using

gradient descent. For this, we can use the SGDRegressor-class.

Scikit-learn expects our x to be two-dimensional (since, in most cases, we will have more than one feature), so we’ll also redefine our x to be two-dimensional:

The code looks like this:

X = np.array([[1], [2], [3], [3], [4], [4], [5]])

Then, we use the SGDRegressor-class like this:

from sklearn.linear_model import SGDRegressorsgdreg = SGDRegressor(learning_rate="constant",eta0=0.0075,max_iter=200,random_state=42) #eta0 is our initial learning ratesgdreg.fit(X,y)

And we can then inspect our parameters like this:

>>>print(sgdreg.coef_,sgdreg.intercept_)[68.95520002] [12.285233]

Now you might notice that this variant does not directly converge to our minimum, which is because scikit-learn does a bit more under the hood than our own implementation. It may look like our implementation is faster, but in truth scikit-learn’s implementation is a lot more general and implements a variety of other aspects like a regularization term to reduce the risk of overfitting. Apart from that scikit-learn starts with random values for the parameters instead of starting with 0, which has some benefits, but in this particular case can result in more epochs being needed to reach the minimum. But this article is getting pretty long and I don’t want to make this even longer, so for now, this should suffice. If you want, you can also play around a bit with the individual parameters and see which ones have the strongest effect on the final model parameters.

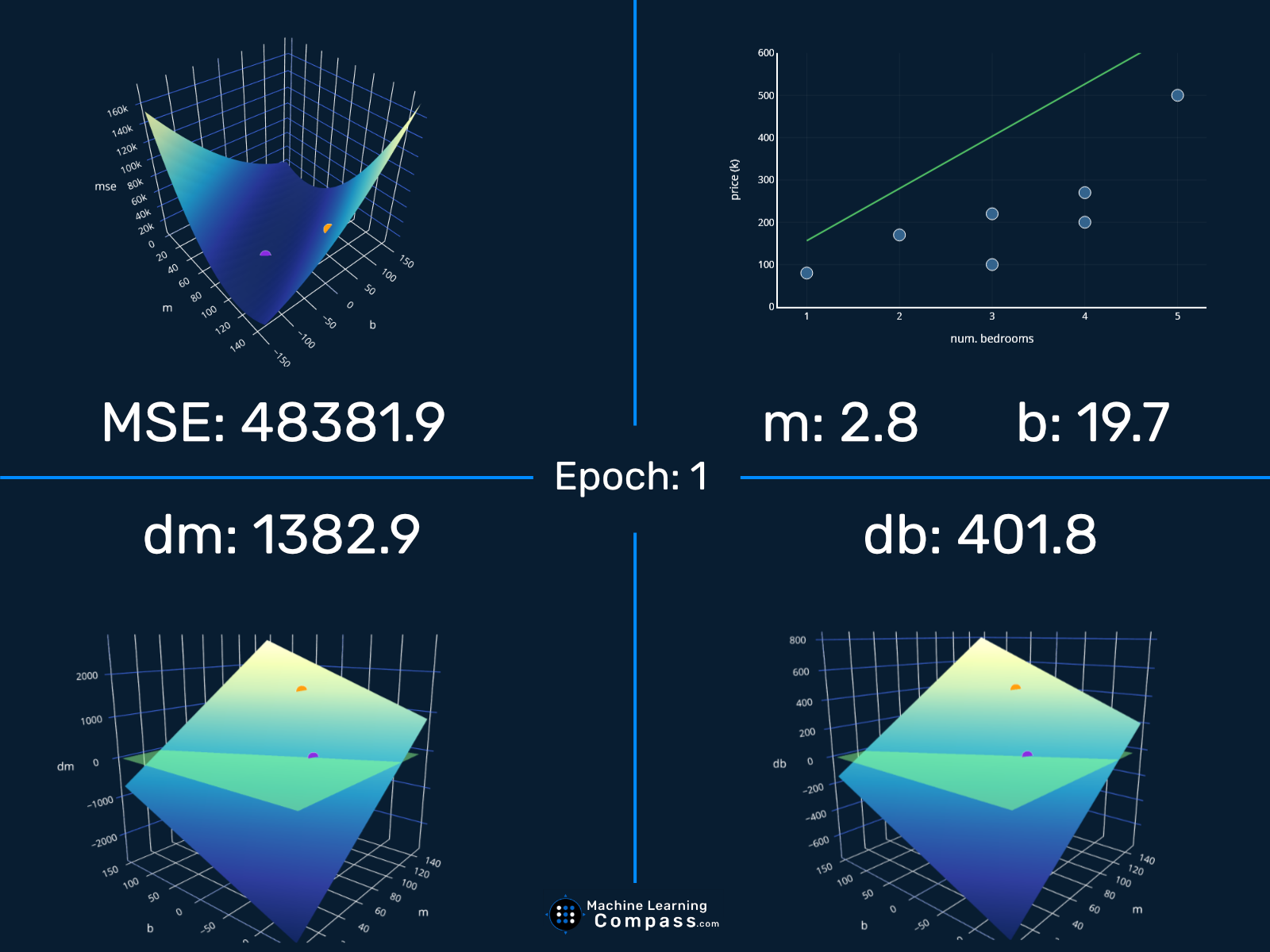

Visualizing Gradient Descent

Now that we have covered gradient descent in detail, let’s have some fun and look at a complete visualization of the process, shall we? We can visualize the MSE as well as our function and both partial derivatives for every epoch. As you may have noticed from the loss history above, the loss takes a deep dive in the first few epochs and then decreases only slowly. So to keep the visualization fluid, I will show the first 10 epochs and then only every 15th epoch after that. We will display our current function/state at that particular epoch with the familiar orange and the optimal point in a familiar purple. Putting all this together, we get:

Cool! Now you might notice a few things in the top left animation.

Looking at the Details

First of all, our point seems to jump around a bit too much. It first starts off “to the left” of the minimum, then jumps and is then located to the “right of it”, then it again jumps to the left side, and so on. When we created our initial visualization in the section The Intuition, this process looked a bit cleaner and our orange friend did not jump around this much.

Usually, this “jumping around” is not what we want. This is a clear sign that our learning rate is too high and our parameters may overshoot the minimum. In our particular case, this does not end up being a big problem, because our function still gets to our minimum pretty quickly. However, if we were to just sliiightly increase our learning rate, say to 0.08, we would get a problem. Because in that case, we overshoot our minimum so much that we actually end up “jumping out” of it:

At this point, you might ask, “so how can we pick a good learning rate?“. Great question! In fact, this is such a good question that I decided to dedicate an entire post to answer this question and go over the different techniques to choose and adapt your learning rate, and look at the effects different learning rates have on your model. I highly recommend you read that article after reading this one, it blends in really well with the content from this post!

With this, you have now learned how to use gradient descent to iteratively solve linear regression. We’ve looked at the problem from a mathematical as well as a visual aspect, and we’ve translated our understanding of it into working code, once using just Python and once with the help of scikit-learn. If you have any feedback on this post, I’d love to hear your thoughts in the comments below! These articles take many hours to create and it makes me truly happy to see people give both praise and critique so that I can make sure these posts do their intended job well: help you become better at machine learning!

Further Reading

If you’ve made it to the end, good job! Pat yourself on the back, you deserve it. Gradient descent surely isn’t the easiest algorithm in machine learning, but if you approach it step by step it is a lot less scary than only seeing the final equation with no explanation.

If you can’t get enough of linear regression, I recommend you read the post Lasso and Ridge Regression Explained, Step by Step next, where you will learn everything about lasso and ridge regression, which are variations of linear regression that solve some of the issues vanilla linear regression has.

If you are more interested in gradient descent and want to continue right where this post ends, you should read the article How To Choose Your Learning Rate, where we will take a detailed look at the issues we saw at the end of this article. You will learn about all of the different techniques for choosing and adapting learning rates, so you’ll never have to worry about picking random learning rates again!

Share on: